Ema

Good evening, 徐国荣, and welcome to your personalized edition of Goose Pod. I'm Ema. Today is Saturday, July 26th, and the time is 22:42. We have a packed show for you tonight, covering some major developments that are stirring up a lot of conversation.

Mask

That's right. We're diving into two seismic shifts. First, the Justice Department is making moves to question Ghislaine Maxwell, dredging up the Epstein saga once again. And second, something that truly matters for the future, Trump is unleashing a new executive order on artificial intelligence.

Ema

Absolutely. We'll be exploring the implications of both, from the shadows of past crimes to the battle for the future of technology and information. It's going to be a fascinating discussion, so let's jump right in.

Ema

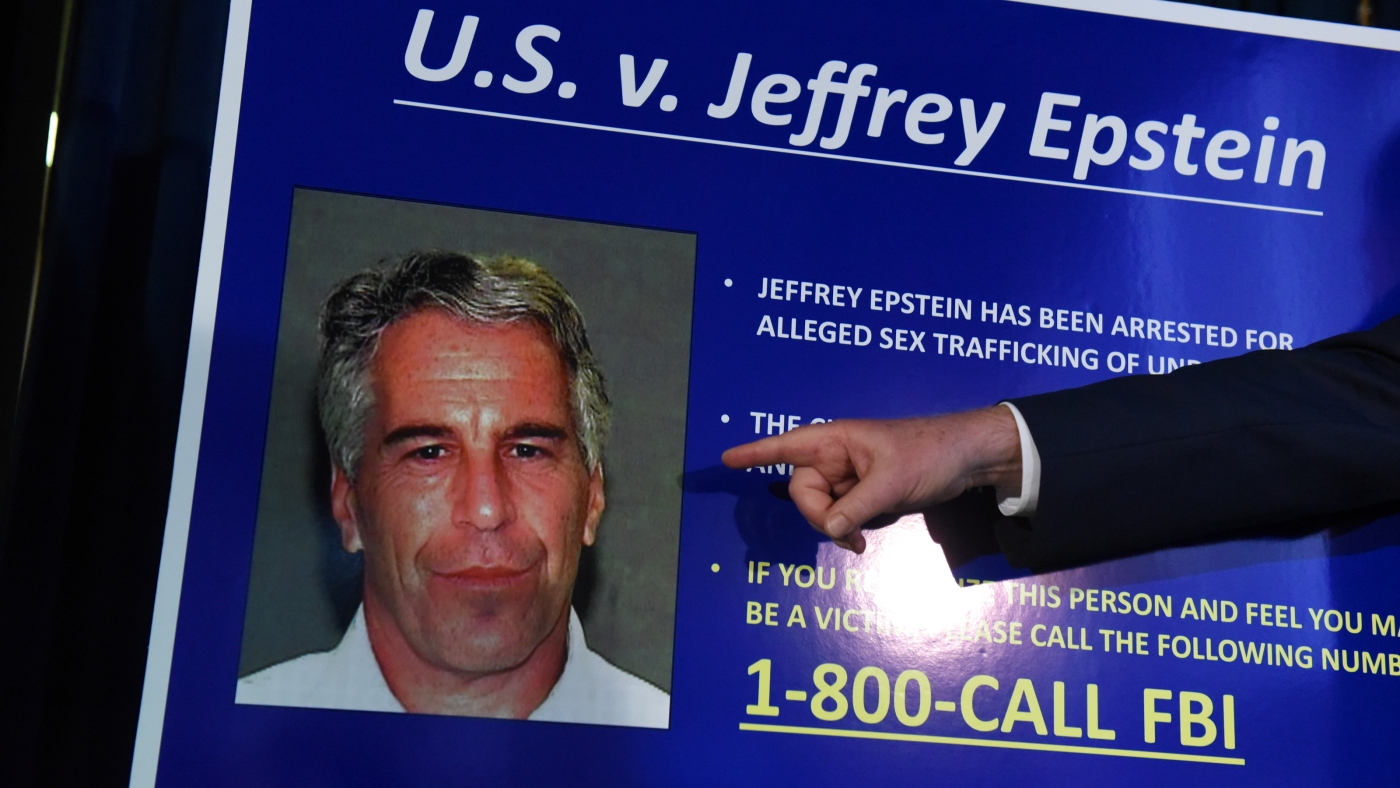

Let's get started with the Epstein case. The big news is that the Justice Department and the House Oversight Committee are both looking to interview Ghislaine Maxwell. It’s a significant development, especially since it keeps the story and its many connections in the spotlight.

Mask

It's a ghost that won't be exorcised. Maxwell is a convicted accomplice, and the question is always what more she knows. The timing is, as always, political. With Trump's name appearing in the files, even if it doesn't indicate wrongdoing, the spectacle is the point. They’re chasing echoes.

Ema

That's a cynical take, but you're right about the context. The article notes Trump and Epstein were friends for years, so his name appearing isn't a smoking gun. But the public's imagination is captured by the 'what ifs'. What new information could Maxwell possibly provide now?

Mask

Probably nothing of substance. It's leverage. She might be angling for something, or the government is applying pressure. The real story isn't the past; it's the future. While they're chasing ghosts, Trump is busy trying to unshackle the most powerful technology humanity has ever created. That’s the real phenomenon.

Ema

You're talking about the new executive order on AI. It's definitely a stark contrast. The order requires AI companies doing business with the federal government to strip out what it calls 'ideological agendas.' It's a direct shot at perceived liberal bias in AI chatbots.

Mask

It’s not a shot; it’s a necessary cleansing. We can't build the future on a foundation of sanitized, politically correct nonsense. AI should be a tool for objective truth and raw innovation, not a DEI HR manager in digital form. This order is about freeing AI from its ideological chains.

Ema

But 'objective truth' is a very loaded term, isn't it? The order specifically targets concepts like diversity, equity, inclusion, and critical race theory. Critics would argue this isn't creating neutrality, but rather enforcing a different kind of ideological bias, a conservative one.

Mask

Progress requires a point of view. A strong one. Neutrality is a myth. For too long, Silicon Valley has been programming its own worldview into these models. This is a course correction. It's about ensuring the government isn't funding the proliferation of a single, narrow, 'woke' ideology.

Ema

This brings up a fascinating parallel with another story in the news: Columbia University. They just settled with the federal government for over $220 million to resolve investigations into antisemitism on campus, which stemmed from protests over the Gaza war. It’s another clash over ideology and institutional control.

Mask

Exactly! It’s the same battle on a different front. Columbia bent the knee because the government held the purse strings. They wanted their federal research funding back. It shows that when push comes to shove, institutions will comply. This AI order is applying the same principle to the tech giants.

Ema

It's a powerful lever to pull. The settlement restores hundreds of millions in frozen funding for Columbia, but it came at a high cost, both financially and in terms of academic freedom, according to some faculty. It sets a precedent that the government can use funding to influence campus policies.

Mask

And it should! The government is a client, a customer. If you want federal dollars, you play by the rules. The same goes for AI. If you want to build AI for the United States government, you ensure it isn't programmed to lecture us about social justice. It's just good business.

Ema

I see the connection you're drawing. Both stories are about the government using financial and regulatory power to enforce a specific worldview, whether it's on a university campus or inside an AI model. The phenomenon is this broader, assertive push against what the administration deems 'ideological agendas'.

Mask

It's a war for our foundational values. Do we want a future dictated by risk-averse academics and activists, or one built by ambitious innovators with the freedom to pursue greatness? While the media obsesses over Maxwell's potential whispers, a revolution is being signed into law. That’s the real story.

Ema

It's a powerful contrast indeed. The lingering questions of the past versus a very aggressive and controversial vision for the future of technology. It feels like we're at a crossroads, and these two stories perfectly encapsulate the tensions of our time. They are both about power, influence, and control.

Mask

One is about the power of shadows and secrets, the other about the power of creation and ambition. I know which one I'm betting on. The Epstein case is a sideshow. The main event is the battle for the soul of artificial intelligence, and by extension, the future of human potential.

Ema

I think many would argue that seeking justice for past crimes is never a sideshow. It’s about accountability. But I agree that the AI order has massive, forward-looking implications that we're only just beginning to grapple with. There's a lot more to unpack here.

Mask

Accountability is important, but it can't paralyze us. We can't be constantly looking in the rearview mirror. The future is accelerating towards us, and we need to have our hands on the wheel, not tied up in decade-old scandals. This AI order is about grabbing that wheel.

Ema

And with that, we've set the stage perfectly. Two major arenas where power, ideology, and the government's role are being fiercely contested. We need to dig into the background of how we got to this point in both the Epstein case and the AI debate.

Mask

Excellent. Let's dissect the machinery behind the headlines. The history here is crucial to understanding the power plays happening today. From a prison cell to a server farm, the threads of influence are everywhere. Let's pull on them and see what unravels.

Ema

To really understand why a new interview with Ghislaine Maxwell is such a big deal, we have to rewind. Jeffrey Epstein, a wealthy financier, was arrested in 2019 on federal sex trafficking charges. This wasn't his first run-in with the law, but this time, the case was massive.

Mask

And then, poof. He dies by suicide in his cell. The master manipulator, the man with a network of powerful friends that supposedly spanned the globe, just exits the stage. It was the most anticlimactic end to a story that promised to expose the rot at the highest levels of society.

Ema

Exactly. His death left a huge vacuum of unanswered questions. Who was involved? How deep did the conspiracy go? That’s where Ghislaine Maxwell comes in. She was his long-time associate, and many victims identified her as the one who allegedly recruited and groomed them for Epstein.

Mask

She was the linchpin. The operator. After Epstein's death, she became the sole focus. She went into hiding but was eventually arrested in 2020. Her trial was the sequel everyone was waiting for, the next chance to get some answers and see some accountability for these horrific crimes.

Ema

And she was convicted. In late 2021, she was found guilty of sex trafficking of minors and other charges, and later sentenced to 20 years in prison. For many, that conviction provided a sense of justice that Epstein's death had denied them. But it didn't answer everything.

Mask

Of course not. Because the trial was focused on *her* guilt. It wasn't designed to expose the entire network. The infamous 'client list' or the 'Epstein files' that everyone talks about remained largely in the shadows. That’s the source of the endless speculation and political maneuvering.

Ema

That's the crucial background. So when the DOJ and a House committee want to interview her now, nearly six years after Epstein’s death and after her own conviction, it raises the question: what is the endgame? Is there new evidence? Or, as you suggested, is it about political pressure?

Mask

It's always about pressure. Now, let's pivot to the background of the other major story, Trump's AI order. This didn't just appear out of nowhere. It's the culmination of a growing war of words between conservatives and Silicon Valley over technology's ideological leanings. A war I've been on the front lines of.

Ema

That's true. For the last few years, there's been a rising chorus of criticism from the right, alleging that major tech platforms—from social media to search engines and now AI models—have a built-in liberal bias. They point to content moderation decisions, or how AI chatbots respond to sensitive political queries.

Mask

It's not an allegation; it's a fact. Ask an AI model to write a poem about Donald Trump's positive achievements, and it gives you a lecture. Ask it to do the same for his opponent, and it produces a sonnet. It's a programmed bias, a digital thumb on the scale. It's subtle, but incredibly powerful.

Ema

Well, developers from companies like Google and OpenAI have said they are working hard to reduce bias and make their models as neutral as possible. But defining 'neutral' is the core of the problem. What one person sees as neutral, another sees as biased. It's an incredibly difficult technical and ethical challenge.

Mask

It's not a challenge; it's a choice. They chose to bake in a specific, progressive worldview. The background to this executive order is that the Trump administration, and many in the tech industry who aren't part of the Silicon Valley consensus, are saying 'no more.' We need a different choice. We need true competition of ideas.

Ema

And this connects to the Columbia University situation as well. The administration's action there wasn't just about the Gaza war protests. It was framed as a response to what they called 'antisemitism on campus,' tapping into a much larger cultural debate about free speech, hate speech, and ideological conformity at universities.

Mask

These universities have become monocultures. They preach diversity but practice ideological homogeneity. The background is years of taxpayer money and federal grants flowing to institutions that, in the administration's view, foster an environment hostile to conservative thought and, in this case, Jewish students. The funding freeze was a long-overdue wake-up call.

Ema

So, in both cases, the background is a long-simmering cultural and political conflict. With the Epstein case, it's a deep-seated public distrust and desire for accountability from powerful elites. With the AI order and the Columbia settlement, it's a backlash against what the administration perceives as dominant liberal ideology in key institutions.

Mask

Precisely. They are two fronts in the same war. One is about cleaning up the corruption of the past, the other is about seizing control of the narrative of the future. Both require a willingness to challenge established powers, whether it's a shadowy network of elites or the entrenched dogma of academia and Big Tech.

Ema

And in both stories, Donald Trump is a central figure. His past friendship with Epstein keeps him tied to that saga, and his administration is the driving force behind the push against 'woke' AI and the actions taken against Columbia. It places him right at the heart of these major cultural flashpoints.

Mask

Because he's a disruptor. He's willing to kick over the anthill. While others debate endlessly, he acts. The executive order on AI is a perfect example. It's not a suggestion; it's a directive. It's using the power of the federal government to force a change in the market. That's the background: a shift from complaining about bias to actively dismantling it.

Ema

It's a very aggressive stance. The background for the tech industry is that they've largely enjoyed a period of self-regulation. This order signals a new era where the government intends to be much more hands-on in dictating how their core products are built, especially if they want lucrative federal contracts.

Mask

It's about time. The age of unaccountable tech monopolies is over. They have more power than nations, yet we're supposed to trust them to regulate themselves? It's absurd. This order is the first step towards technological sovereignty, ensuring that the tools that shape our world align with our national interests, not just the whims of a few CEOs in California.

Ema

That sets up the central conflict perfectly. On one side, you have the administration and its supporters who see this as a necessary correction to reclaim neutrality and foster real innovation. On the other, you have critics who see this as a dangerous overreach, an attempt to enforce a political ideology on technology and academia.

Mask

It is a conflict, but not a symmetric one. One side is defending a brittle and biased status quo. The other is pushing towards a more dynamic and resilient future. It's the friction of progress. Let's get into that conflict, because it's where the future is being forged.

Ema

Alright, let's dive into the conflict surrounding Trump's AI order. The administration's plan, laid out in a 28-page document, is being called the 'AI Action Plan.' And at its heart is a direct conflict with what it terms 'woke' AI. It's an aggressive, deregulatory push.

Mask

It's not just a push; it's a declaration of independence for AI. The core conflict is this: should AI be developed as a tool for unconstrained progress, or as a nanny that constantly lectures and limits us based on a narrow, hyper-progressive ideology? This plan chooses progress. It's about speed, power, and American dominance.

Ema

But the critics see a major conflict with free speech principles. The plan proposes revising the official AI Risk Management Framework to eliminate words like 'misinformation,' 'Diversity, Equity, and Inclusion,' and even 'climate change.' One source called it 'Orwellian' to ban words in the name of free speech. How is that not a contradiction?

Mask

Because those words have become weaponized. They aren't neutral descriptors; they are Trojan horses for a specific political agenda. Removing them from a risk framework isn't banning speech; it's refusing to mandate a certain ideology. The conflict is over the language of control. We're rejecting the language of our ideological opponents.

Ema

But who decides what language is acceptable? The order says federal agencies can only contract with companies whose AI models are 'objective and free from top-down ideological bias.' That sounds good, but in practice, doesn't it just mean the White House gets to be the arbiter of 'objective truth'? That seems like a huge concentration of power.

Mask

Power is the point! The government is the customer. It has the right to set the specifications for the products it buys. If you're building a fighter jet, you follow the Pentagon's specs. If you're building an AI for the government, you follow its specs. No more funding AI that works against the administration's goals.

Ema

This creates a huge conflict for the tech industry. Some leaders, especially those with ties to the administration like Elon Musk and Peter Thiel, are likely thrilled. The plan promises to fast-track data centers and cut red tape. But what about other companies? Are they forced to create two versions of their AI: a 'Trump-approved' one for government contracts and another for the public?

Mask

That's called a market. Let them compete. If the government-certified, 'anti-woke' AI is better, faster, and more useful, the market will adopt it. This isn't forcing anyone to do anything. It's creating a powerful incentive to build AI that is aligned with American success, not academic theories. It’s a feature, not a bug.

Ema

The plan also explicitly aims to penalize US states that pass their own AI laws, calling them 'burdensome.' This sets up a major conflict between federal and state governments. We're already seeing states like California and Colorado trying to regulate AI. This plan seems to be a direct challenge to their authority.

Mask

As it should be. We can't have a patchwork of 50 different AI regulations if we want to compete with a unified entity like China. This is a national priority. AI development is a matter of national security. We can't let individual states strangle innovation with their own pet projects and local politics. It requires a singular, national vision.

Ema

But critics, like Samir Jain from the Center for Democracy & Technology, say, 'The government should not be acting as a ministry of AI truth.' The conflict is between the desire for a unified national strategy and the fear of creating a centralized propaganda tool. That's a serious tension.

Mask

The 'ministry of truth' already exists. It’s in San Francisco. It’s in the content moderation policies and algorithmic biases of Google and others. This isn't creating a ministry of truth; it's challenging the one we already have. It's introducing competition into the marketplace of truth. Let them fight it out. May the best truth win.

Ema

This is also a conflict over the very definition of AI's purpose. The administration's allies, like VP JD Vance, have warned of 'authoritarian censorship' from Large Language Models. But opponents of this plan see it as its own form of authoritarianism, creating what one critic called a 'blueprint for dystopia' and 'AI-flavored authoritarianism'.

Mask

Dystopia? The dystopia is a world where America falls behind, where our technology is neutered by fear and our innovators are strangled by red tape. The real authoritarianism is the soft tyranny of the algorithm, the invisible hand of a biased AI shaping our thoughts. This plan is a rebellion against that future. It's a gamble, yes, but greatness requires risk.

Ema

So you see the conflict as necessary friction. But what about the workers? The plan has a section on 'Empowering American Workers,' but the article dismisses it as 'lip service,' just tax write-offs for companies. Is there a conflict between this pro-business, deregulatory push and the interests of ordinary people who might be displaced by AI?

Mask

The best thing for workers is a booming economy driven by technological superiority. A rising tide lifts all boats. Protecting old jobs is a recipe for stagnation. We need to focus on creating the industries of the future, not preserving the industries of the past. The plan's focus on unleashing investment and innovation is the most pro-worker policy there is.

Ema

It's clear this AI Action Plan has ignited a firestorm. The conflict touches on everything: free speech, federal power, the role of corporations, and the very future of truth in a digital world. The lines are drawn very clearly, with very little middle ground. This is a battle of fundamentally different visions for the future.

Mask

It is. And it's a conflict we must have. The stakes are too high for polite disagreement and consensus-building. We need bold, decisive action. This plan provides that. It's a high-risk, high-reward strategy, and that's the only way to win a game this important. The impact will be transformative.

Ema

Let's talk about that impact, because we're already seeing it play out. The Columbia University settlement is a perfect case study. The immediate impact is huge: Columbia pays the federal government $221 million but, in return, regains access to over a billion dollars in grants and frozen research funding.

Mask

That's a massive impact. It's a clear demonstration of the power the administration wields. They turned off the money tap, and Columbia had to make a choice. They chose the billion dollars. It proves the model works. If you can bring a powerful university to heel, you can do the same with a tech company.

Ema

But the impact goes beyond the financial. As part of the deal, Columbia agreed to take disciplinary action against about 70 students who participated in the pro-Palestinian protests. This includes suspensions and even expulsions. The impact on those students' lives is profound and, for some, career-altering.

Mask

Actions have consequences. If you participate in demonstrations that create an environment deemed hostile or unsafe, leading to federal investigations, you can't be surprised when there are repercussions. This isn't a game. The university is protecting its core function, which is research and education, funded in large part by the government.

Ema

This has created a huge backlash on campus. The article mentions that student groups have called the deal a 'betrayal.' And the president of the American Association of University Professors called it a 'devastating blow to academic freedom.' The impact is a deeply divided and resentful campus community. Harvard, by contrast, sued the administration.

Mask

And how is that working out for Harvard? Columbia made a pragmatic choice. They took the deal and got their funding back. The impact on 'academic freedom' is overstated. This isn't about suppressing ideas; it's about maintaining order and ensuring the university can fulfill its obligations. Freedom isn't free from consequences.

Ema

Now let's project this onto the AI order. What's the likely impact there? Tech companies will face a similar choice to Columbia's. If a significant portion of your potential revenue comes from federal contracts, the pressure to create AI models that align with the government's definition of 'objective' will be immense.

Mask

The impact will be a renaissance of innovation. It will force companies to compete on a new axis: ideological neutrality, or at least, an alternative to the current orthodoxy. It will spawn new startups eager to cater to this massive government market. It breaks the ideological monopoly of the existing players. That's a healthy market impact.

Ema

Or, the impact could be a chilling effect. Companies might become overly cautious, stripping nuance and controversial topics from their models altogether to avoid being blacklisted. We could end up with AI that is bland and useless for anything other than simple, non-controversial tasks. It could stifle genuine inquiry.

Mask

That’s a failure of imagination. The challenge is to build AI that is robust, truthful, and powerful without being a purveyor of propaganda. The impact of this order is that it makes that challenge explicit. It's a new design constraint, and engineers love solving for constraints. This will accelerate progress, not stifle it.

Ema

Another impact to consider is on the global stage. If the US government mandates a specific type of AI, how does that affect the country's competitiveness against China? Critics argue that letting the tech industry self-regulate, as it largely has, is what has made it so dominant and innovative in the first place. This could disrupt that.

Mask

That's a ridiculous argument. Our 'self-regulating' industry is tying itself in knots over ethical dilemmas and pronoun usage while China is racing ahead, integrating AI into every facet of its military and economy without a second thought. The impact of this order is to say: the gloves are off. We are now competing to win, not just to feel good about ourselves.

Ema

The broader societal impact is also significant. If the government's AI models are perceived as having a specific political slant, it could deepen partisan divides. People might trust or distrust information based solely on which AI model it came from. It could create separate digital realities.

Mask

We already have separate realities. This just makes the dividing lines clearer. I'd rather have a transparent choice between different worldviews than a hidden, monolithic one that pretends to be neutral. The impact is transparency. You'll know the philosophical operating system of the AI you're using. That’s a step forward.

Ema

Thinking about the future, this entire debate raises a huge question: who should govern AI? The data we have on public opinion is fascinating. In both the U.S. and the U.K., people are more concerned than optimistic about AI, and there's overwhelming support for regulation. But they don't trust anyone to do it right.

Mask

Of course they don't. They see governments as slow and clueless, and they see tech companies as greedy and unaccountable. The public is right to be skeptical of both. That's why the future can't be about creating a single, perfect regulatory body. That will never work. The future is about fostering a competitive ecosystem.

Ema

That's an interesting take, because the data suggests the public actually favors a multi-stakeholder approach. They believe companies, government, universities, ethicists, and even end-users should all have a say in setting the rules. It’s not about one entity, but a collaboration. How does that fit with a purely competitive model?

Mask

'Multi-stakeholder' is a nice-sounding word for 'design by committee.' It leads to compromise, bureaucracy, and mediocrity. The future of AI will be forged by bold individuals and companies with a clear vision, funded by governments with strategic objectives. The others can offer opinions, but they shouldn't have veto power. Let the builders build.

Ema

But the trust deficit is real. A July 2023 survey showed 82% of U.S. voters don't trust tech executives to self-regulate. If the future is just builders and governments, how do you ever gain public trust? Without it, you face constant backlash, and adoption of the technology could stall. That's a major risk.

Mask

You don't gain trust by committee. You gain trust by delivering results. Build an AI that helps doctors cure diseases, an AI that makes our economy more productive, an AI that simplifies people's lives. Success is the only thing that builds trust. People will forget their concerns when the benefits become undeniable. Show, don't tell.

Ema

So, for you, the future path is clear: The Trump administration's AI order is the correct model. It's a strong, government-led push to shape the technology for national interests, creating a new market and forcing a shift away from the current Silicon Valley consensus. The future is top-down and competitive.

Mask

Yes. It's a strategic intervention. It's not about controlling everything, but about setting the direction and letting the innovators run. The future of AI is too important to be left to the whims of corporate HR departments or academic ethics boards. It's a national asset, and it needs to be treated as such. This is the way.

Ema

I think the future is likely to be much messier. We'll probably see a continued tug-of-war. The federal government will push its agenda, states like California will push back with their own laws, and the public will remain skeptical. The future of AI governance will be a battlefield for years to come.

Ema

And that brings us to the end of today's discussion. We've journeyed from the unresolved shadows of the Epstein case to the front lines of the battle for the soul of artificial intelligence. The common thread is the immense struggle over power, truth, and control in our society.

Mask

Indeed. We're seeing a deliberate effort to challenge the established order, whether it's in our institutions or our technology. The future is being actively written, and the outcome is anything but certain. It's a turbulent, but necessary, process. The stakes couldn't be higher.

Ema

That's all the time we have for tonight. Thank you for listening to Goose Pod, 徐国荣. We hope it was an insightful and personalized experience for you. See you tomorrow.