## Editorial: Robots are Taking Over the World, We Get It - The Brown and White

**Report Provider:** Brown and White Editorial Board

**Publication Date:** September 16, 2025

**Topic:** Technology (Artificial Intelligence)

### Summary of Key Findings and Conclusions

This editorial from The Brown and White expresses a growing frustration with the pervasive discussion of and reliance on Artificial Intelligence (AI), particularly in academic and journalistic contexts. While acknowledging AI's potential as a tool, the authors argue that its current emphasis is overshadowing the value of human intellect, creativity, and judgment.

**Main Points:**

* **Overemphasis on AI:** The article contends that AI is becoming an overused topic, with institutions and individuals fixating on its capabilities to the detriment of human skills.
* **AI as a Tool, Not a Crutch:** The central argument is that AI should be viewed as a tool to support learning and work, not as a replacement for human effort, critical thinking, or authentic writing.
* **Human Writing's Uniqueness:** The editorial emphasizes that AI, despite its ability to string words together, cannot write in the "raw, beautiful way humans can," nor can it experience the process of laboring over drafts to bring ideas to life.
* **Institutional Contradictions:** Universities like Lehigh are criticized for sending mixed messages. While formally establishing AI policies that emphasize ethical use and professor discretion, they simultaneously promote AI tools like Google Gemini and encourage preparation for an "AI ready future," creating a perceived hypocrisy.
* **Impact on Journalism:** The authors, as student reporters, feel the impact of AI on their field, noting the narrative that robots could replace journalists. They highlight the U.N.'s acknowledgment of AI's dual role as a tool and a threat to press freedom.
* **Public Trust in Human Journalism:** A Pew Research Center survey indicates that a plurality of U.S. adults (41%) believe AI would do a poorer job writing than a journalist, with only 19% believing AI would do better. This suggests a continued trust in human judgment, context, and nuance.
* **Risks of AI-Generated Content:** Recent controversies involving Sports Illustrated and J.Crew, which faced backlash for publishing AI-generated content, underscore the fragility of trust in news outlets when AI is involved.
* **Call for Celebrating Human Accomplishments:** The editorial concludes by urging institutions to shift their focus from promoting AI tools to celebrating the achievements of human creators, writers, artists, and those who dedicate time and energy to their crafts.

### Key Statistics and Metrics

* **Pew Research Center Survey Findings:**
    * **41%** of U.S. adults believe AI would do a poorer job writing than a journalist.
    * **19%** of U.S. adults believe AI would do a better job writing than a journalist.
    * **20%** of U.S. adults said AI would do about the same job writing as a journalist.

### Important Recommendations

* **Use AI as a Tool, Not a Crutch:** Emphasize AI's role in supporting learning and work, rather than as a substitute for human effort and critical thinking.
* **Promote Transparency:** Clearly state when AI is used in any capacity in academic or journalistic work.
* **Celebrate Human Accomplishments:** Institutions should actively promote and celebrate the work of human creators, writers, and artists, rather than solely focusing on AI tools.
* **Understand and Use AI Responsibly:** The goal should be to understand AI and use it responsibly, not to "worship" it.

### Significant Trends or Changes

* **Pervasive AI Discussions:** AI is a dominant topic in conversations, lectures, and emails.
* **Institutional AI Policies:** Top universities, including MIT, Yale, and Princeton, are establishing formal AI policies.
* **Increased Promotion of AI Tools:** Universities are actively promoting AI tools and offering informational sessions on their use.
* **Concerns about AI in Journalism:** There is a growing narrative and concern about AI potentially replacing human journalists.

### Notable Risks or Concerns

* **Erosion of Human Creativity and Voice:** Overreliance on AI may diminish the value and practice of human writing and creative expression.
* **Loss of Trust:** The use of AI-generated content in media can shake reader trust, particularly in news outlets.
* **Giving AI Undue Power:** By letting AI "loom over us," we grant it more power than it deserves, potentially overshadowing human judgment and storytelling.
* **Threats to Press Freedom and Integrity:** The U.N. has noted that AI presents both powerful tools and significant threats to press freedom, integrity, and public trust.

### Material Financial Data

* No specific financial data or figures related to AI investment, cost, or economic impact are presented in this editorial.

### News Identifiers

* **Title:** Editorial: Robots are Taking Over the World, We Get It
* **Publisher:** The Brown and White
* **URL:** https://thebrownandwhite.com/2025/09/16/editorial-robots-are-taking-over-the-world-we-get-it/
* **Published At:** 2025-09-16 14:00:11

Editorial: Robots are taking over the world, we get it - The Brown and White

The last time you had a problem, needed an answer to a question or were in search of relationship advice, did you seek out human insight or did you open a new tab on your computer to ChatGPT? It feels as if every conversation, lecture and email these days mentions AI, which makes sense when it’s so frequently used.

With each assignment, it seems like everyone turns to ChatGPT — often bookmarked on their computer — for answers, particularly if the assignment involves writing. At some point, we need to see that although artificial intelligence can string words together, it can’t truly write — not in the raw, beautiful way humans can.

It’s never labored over a draft until its ideas came alive. It’s tiring to hear students and university administrations fixate on AI and what it can do. By letting AI loom over us, we give it more power than it deserves, when really it should be used as a tool, not a crutch. Top universities, including the Massachusetts Institute of Technology, Yale and Princeton, have established their own formal AI policies, most of which state the use of AI is up to the discretion of the professor, or students need to explicitly state if AI is used in any capacity in their work.

Lehigh is no exception, with an email sent to the campus community on Sept. 12 from Provost Nathan Urban discussing how to properly use generative AI. In the email, Urban clarified the importance of using AI effectively, ethically and as a tool to support learning as opposed to a replacement. But with a sign-off to the email encouraging students to use Google Gemini, to which everyone is granted access through Lehigh’s licensing partnership with Google, and asking students to share “ideas for how Lehigh can better prepare you for an AI ready future,” the message felt contradictory — almost as if we should be preparing ourselves for an AI filled future of learning.

With this year’s Compelling Perspectives series being about AI, and it feeling like every other event on Lehigh’s events calendar is a seminar about how to use Gemini, it’s hard to escape the robot talk. And while we know it’s important to discuss it — since it’s clearly not going away anytime soon — it’s hypocritical for Lehigh to say AI will never replace learning when it’s the same institution constantly pushing informational sessions and lectures at us about how to best use the tool.

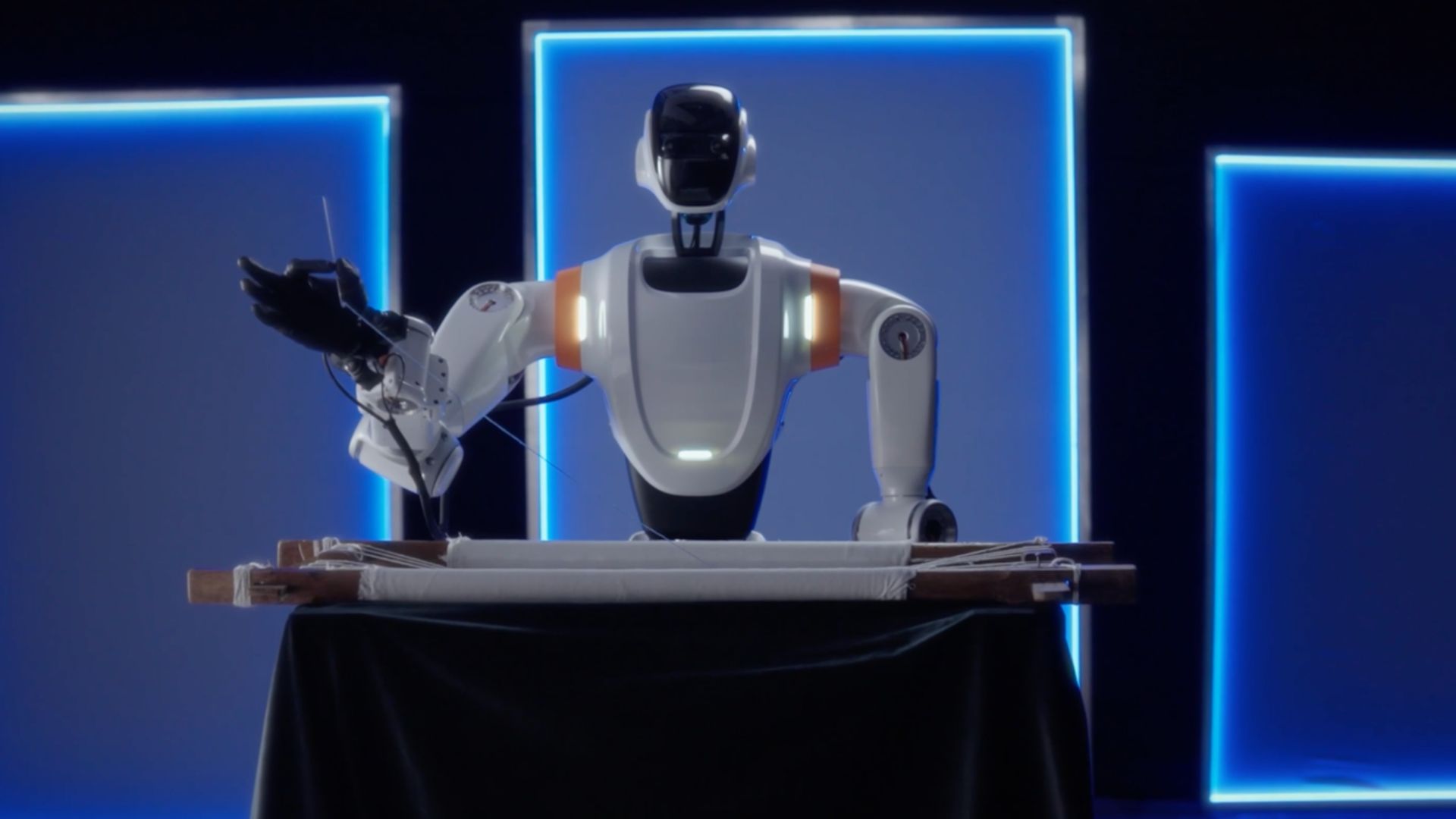

As writers who pour time and energy into crafting captions and headlines and perfecting every sentence we publish each week, we’ve particularly felt the impact of AI taking the world by storm in recent years. We’re also no strangers to the narrative seen, ironically, in the media that the next generation of journalists could just be robots writing stories.

The U.N. even recently noted that “AI presents both powerful tools and significant threats to press freedom, integrity and public trust.” A Pew Research Center survey found that 41% of U.S. adults think AI would do a poorer job writing than a journalist, while 19% think AI would do better and 20% said it would do about the same.

In other words, the largest share of survey participants saw AI writing as inferior to human work, reaffirming that trust in human judgement, context and nuance still matters. Yet roughly one in five participants expressed outright dissatisfaction with human writing, and another portion remained indifferent. This reality demands careful consideration of AI’s benefits and risks.

On one hand, it’s frustrating to see our peers rely on ChatGPT and other large language models for writing when the goal of academia and journalism is to preserve human voices. We know what human writing looks like, what it sounds like and how it reads on paper. As student reporters, we take AI seriously, with our publication’s policy prohibiting AI in story and art creation, requiring transparency if generative AI is used at all.

Still, it would be ignorant for us to overlook AI’s valuable contributions to things like data analysis or idea generation. We’ve all experimented with it in one way or another, and it’s easy to see its appeal. Even in journalism, it can help with transcribing interviews or translating. But for now, people still prefer to get their news from human journalists.

Recent controversies have proved this, with outlets like Sports Illustrated and J.Crew facing intense backlash after publishing AI-generated content, sparking outrage among readers. News outlets rely heavily on trust, and that trust can be shaken by AI, which is why it’s so important to ensure it never grows powerful enough to overshadow human judgement and storytelling.

If Lehigh really cared so much about making sure AI doesn’t replace learning, it should put more effort into celebrating the accomplishments of those who create things with their own judgement. As opposed to sending university-wide emails about Gemini tools and advertising seminars and lectures about robots, let’s make better known the accomplishments of the writers, artists, creators and those who pour hours into their craft, no matter what is being produced.

Our job isn’t to worship AI, but to understand it and use it responsibly, because when we walk across the stage at graduation, we’ll be celebrating what we’ve accomplished — not what AI has generated.